What Are AI SOC Agents? And How Do They Work – Detailed Guide

Modern Security Operations Centers (SOCs) are drowning in alerts and overburdened by a shortage of skilled analysts. AI SOC agents have emerged as a new solution: autonomous, AI-driven systems that act like virtual Tier-1 analysts. Instead of following rigid, rule-based scripts or static playbooks, an AI SOC agent “reasons through problems, adapts to new situations, and works continuously without human intervention”.

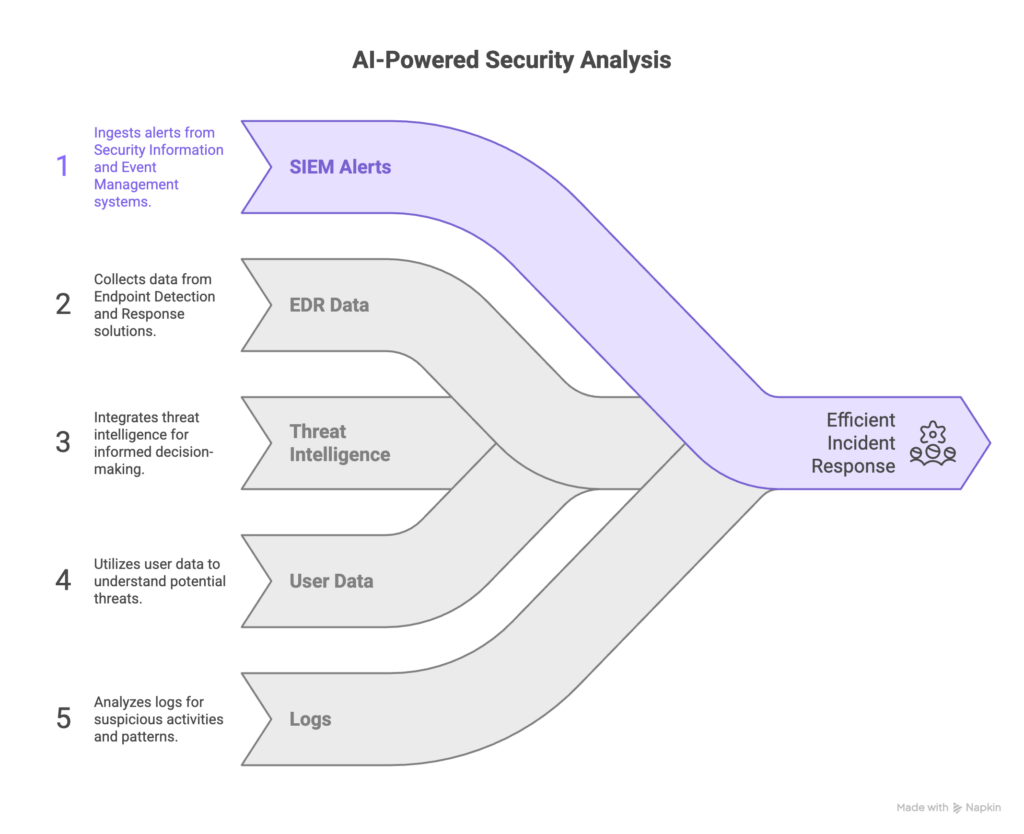

In practice, that means the agent can ingest alerts from your SIEM and EDR, gather context (threat intelligence, user data, logs), and triage and investigate incidents in natural language – effectively mimicking a human analyst at machine speed.

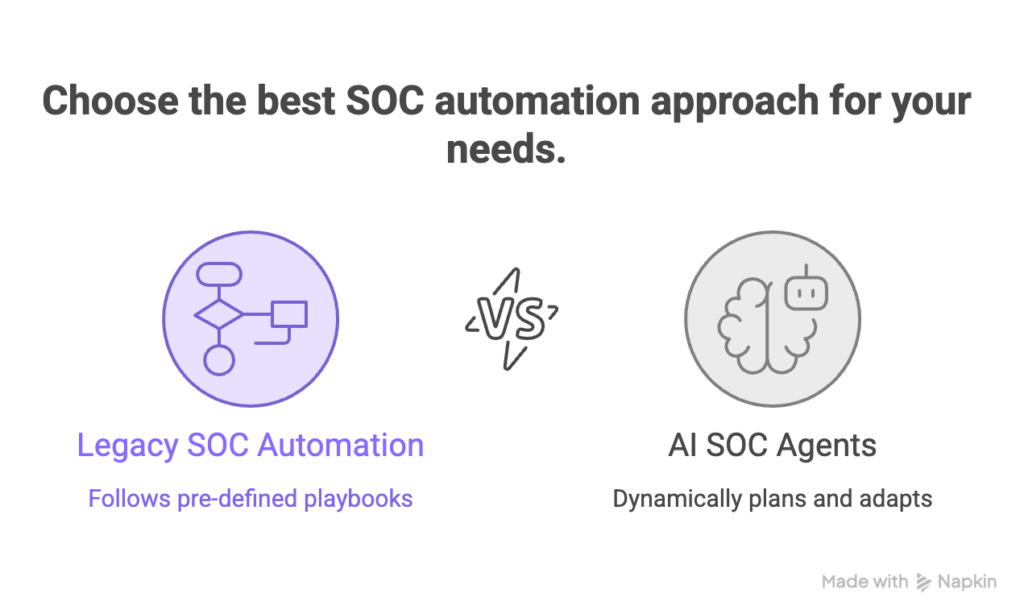

In other words, an AI SOC agent handles much of the repetitive alert triage and enrichment that would normally burden analysts, freeing them to focus on strategic, high-value work. Unlike legacy SOC automation (SOAR) that follows pre-defined playbooks, AI agents use agentic AI models to dynamically plan, adapt, and explain themselves. The net effect is a smarter, more flexible SOC workflow: agents reduce noise, prioritize real threats, and even perform basic response actions autonomously, all while providing transparent reasoning.

How AI SOC Agents Differ from Traditional SOC Automation

Adaptive reasoning vs. rigid rules: Traditional SOC tools (SIEM, SOAR) rely on static rules or playbooks. They execute exactly what they’re programmed to do and often break on novel scenarios. By contrast, AI SOC agents employ advanced machine learning and natural language models that reason through each alert contextually. They can handle unexpected cases (“edge cases”) without requiring manual rule updates.

Continuous learning vs. one-time setup: SOAR automations must be hand-coded and repeatedly maintained. AI agents, on the other hand, continuously learn from new data and analyst feedback. Over time, their decision-making improves and adapts to your organization’s specific environment and threat patterns.

Proactive investigation vs. reactive execution: SOAR is inherently reactive – it waits for an alert and then runs a fixed workflow. AI agents take a more proactive stance. For example, they can be triggered on all incoming alerts and even initiate their own queries (such as hunting IOCs) without waiting for a scripted step. This means they can uncover subtler threats and correlated signals that rigid automations would miss.

Natural-language output vs. technical logs: Traditional tools generate tickets or logs that analysts must interpret. AI agents translate their findings into clear, human-readable summaries. They can explain why they classified an alert as benign or malicious, providing analysts with audit trails and context.

In short, AI SOC agents are fundamentally different from traditional automation. They are autonomous “thinking” tools, not just “doing” tools. As industry experts note, agentic AI “builds a chain of logic to explain why an alert matters — or doesn’t — before an analyst ever sees it,” without requiring any manually coded playbooks. This shift unlocks the efficiency and coverage that SOAR was supposed to provide, but rarely achieved.

AI SOC Agent Architecture & Capabilities

Behind the scenes, AI SOC agents employ a modern, distributed SOC architecture built for speed and scale. Traditional SOCs often funnel all data through a central SIEM, which then requires analysts to switch between tools. An AI-driven architecture decentralizes this process. An AI agent platform typically connects directly to your data sources and tools (EDRs, identity systems, cloud logs, etc.) rather than relying solely on a central SIEM. This “AI-native” model creates a unified control plane: agents ingest and process data in parallel, applying intelligence closer to the source.

In practice, an AI SOC agent is like a team of specialized micro-agents (Agent Swarms) working in concert. Each agent has a narrow focus (for example, one handles endpoint triage, another handles identity alerts). A central orchestration engine routes alerts to the appropriate agent. The agents then query multiple tools and databases simultaneously – spanning SIEMs, EDR/endpoint systems, identity directories, and threat intelligence feeds. This distributed, agentic architecture eliminates the old “swivel-chair syndrome” where analysts flip between dozens of consoles.
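The routing step described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any vendor's implementation: the alert categories, the agent functions, and `route_alert` are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Alert:
    alert_id: str
    category: str  # e.g. "endpoint" or "identity" (illustrative values)
    summary: str


def endpoint_agent(alert: Alert) -> str:
    return f"endpoint agent investigating {alert.alert_id}"


def identity_agent(alert: Alert) -> str:
    return f"identity agent investigating {alert.alert_id}"


def human_queue(alert: Alert) -> str:
    # Fallback: categories with no specialized agent go to a person.
    return f"queued {alert.alert_id} for human review"


# Hypothetical registry mapping alert categories to specialized agents.
AGENTS: Dict[str, Callable[[Alert], str]] = {
    "endpoint": endpoint_agent,
    "identity": identity_agent,
}


def route_alert(alert: Alert) -> str:
    """Send the alert to its specialized agent, or to a human if none exists."""
    handler = AGENTS.get(alert.category, human_queue)
    return handler(alert)
```

Routing unknown categories to a human queue rather than a default agent is one conservative design choice; a real orchestrator might instead classify the alert first.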

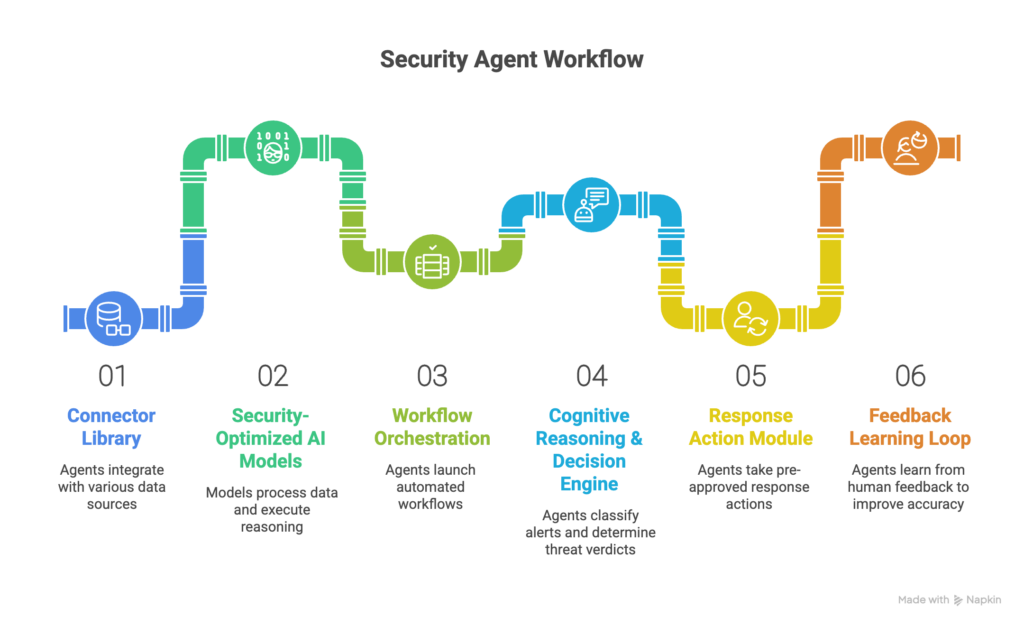

Key architectural features include:

Connector library: Agents use out-of-the-box integrations/APIs to pull in data from SIEMs (e.g. Splunk, Microsoft Sentinel), EDR platforms (CrowdStrike, SentinelOne, Defender), email systems, identity providers (Azure AD, Okta), and threat intel sources. They also push results into ITSM and chat tools (ServiceNow, Jira, Slack) to create or update tickets seamlessly.

Security-optimized AI models: At their core, agents employ LLMs or machine learning models that have been fine-tuned for security contexts. These models parse logs, process threat intelligence, and execute reasoning over evidence. They may include a knowledge base of CVEs, MITRE ATT&CK, and organizational policy rules to ground their decisions.

Workflow orchestration: Upon an alert trigger, the agent launches a “playbook” workflow of automated steps – but these steps are dynamic, not hard-coded. For example, the agent might fetch a process tree from the endpoint, query a threat hash on VirusTotal, check the user’s role in Active Directory, and then pass all that context to its reasoning engine. The entire chain is orchestrated automatically.

Cognitive reasoning & decision engine: Using specialized security LLMs, the agent reasons over the gathered facts to classify the alert. It weighs business context (e.g. a finance user running admin scripts is more suspicious than an IT user doing the same) and arrives at a threat verdict (benign, suspicious, malicious).

Response action module: If the agent has high confidence in a verdict, it can even take pre-approved response actions automatically – such as isolating a host, disabling a user account, or blocking an IP address. All actions are logged for audit.

Feedback learning loop: After handling an alert, the agent can learn from human feedback. If an analyst reviews and corrects a decision, that information is fed back to retrain the agent’s models. This continuous learning refines accuracy over time.

This flexible, agentic architecture means AI SOC agents can analyze data faster and more comprehensively than any single tool or human. They distribute processing across multiple AI modules, each querying different parts of the security stack in parallel. According to industry reports, such an architecture turns a SOC into an always-on, data-driven operation: agents can investigate threats “instantly, without breaks, and in parallel” – something human teams simply can’t match.
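To make the parallel context gathering concrete, here is a rough sketch using Python's `asyncio`. The three lookup functions are stand-ins that return canned data; their names, return shapes, and the `gather_context` helper are assumptions for illustration, and a real deployment would call the EDR, threat-intel, and directory APIs instead.

```python
import asyncio
from typing import Any, Dict


async def fetch_process_tree(host: str) -> Dict[str, Any]:
    await asyncio.sleep(0.01)  # stands in for an EDR API call
    return {"host": host, "processes": ["powershell.exe"]}


async def lookup_hash_reputation(file_hash: str) -> Dict[str, Any]:
    await asyncio.sleep(0.01)  # stands in for a threat-intel lookup
    return {"hash": file_hash, "reputation": "unknown"}


async def lookup_user_role(user: str) -> Dict[str, Any]:
    await asyncio.sleep(0.01)  # stands in for a directory query
    return {"user": user, "role": "finance"}


async def gather_context(host: str, file_hash: str, user: str) -> Dict[str, Any]:
    # Run all three lookups concurrently rather than one after another.
    tree, reputation, role = await asyncio.gather(
        fetch_process_tree(host),
        lookup_hash_reputation(file_hash),
        lookup_user_role(user),
    )
    return {"process_tree": tree, "reputation": reputation, "user": role}


context = asyncio.run(gather_context("host-x", "abc123", "jdoe"))
```

The combined `context` dictionary is what would then be handed to the reasoning engine.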

Core Functions: Triage, Investigation, Enrichment & Decision-Making

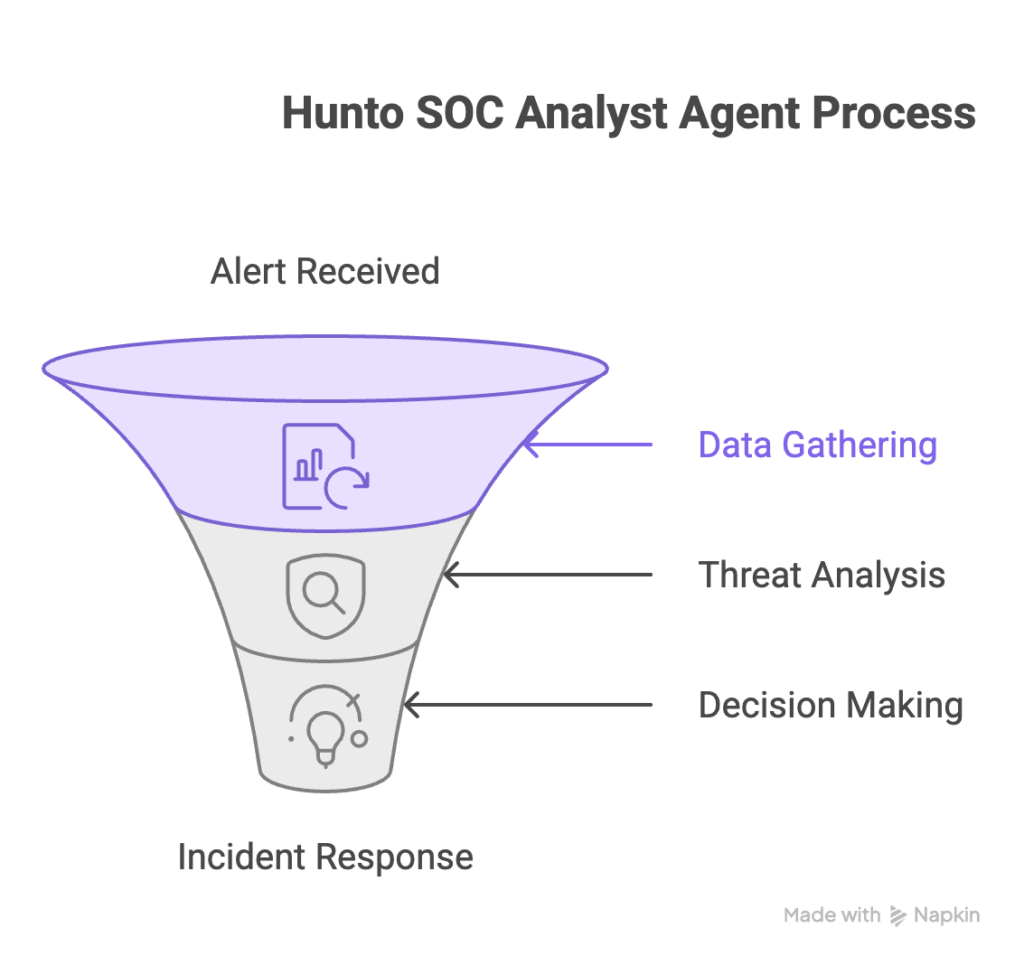

AI SOC agents specialize in four core tasks, essentially performing the duties of a Tier-1 analyst at machine speed:

Alert Triage:

When an alert is generated (e.g. an endpoint detects suspicious behavior), the agent immediately ingests it from the SIEM or EDR. The agent automatically categorizes the alert type (email, endpoint, cloud, etc.) and enriches it with context. This initial triage includes tagging the alert’s severity and priority. Crucially, the agent filters out obvious false positives right away. For example, if a security update triggered the alert or a known benign script ran, the agent can automatically mark it as “no threat” and dismiss it.

In practice, vendors report auto-closing 50–80% of false alarms through intelligent triage. By doing this, AI agents dramatically unclog the alert queue so analysts only see the alerts that truly matter.
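As a simplified picture of the false-positive filtering step, the sketch below dismisses alerts that match a known-benign explanation and passes everything else on for investigation. The allowlists, field names, and `triage` function are hypothetical; a real agent would draw this context from asset and change-management data and reason over it rather than match fixed sets.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical allowlists, hard-coded here only to keep the example short.
KNOWN_BENIGN_SCRIPTS = {"backup_nightly.ps1", "patch_rollout.ps1"}
MAINTENANCE_HOSTS = {"wsus-01"}


@dataclass
class Alert:
    alert_id: str
    source: str  # "email", "endpoint", "cloud", ...
    host: str
    script: Optional[str] = None


def triage(alert: Alert) -> dict:
    """Return a triage record: category, disposition, and the reason for it."""
    record = {"id": alert.alert_id, "category": alert.source}
    if alert.script in KNOWN_BENIGN_SCRIPTS:
        record.update(disposition="no threat", reason="known benign script")
    elif alert.host in MAINTENANCE_HOSTS:
        record.update(disposition="no threat", reason="host is in an update group")
    else:
        record.update(disposition="investigate", reason="no benign explanation found")
    return record
```

Recording the reason alongside the disposition is what keeps an auto-closed alert auditable later.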

Contextual Investigation:

If an alert warrants a deeper look, the agent launches a multi-faceted investigation. It queries multiple data sources in parallel – spanning endpoints, network logs, cloud services, identity management systems, and more. For instance, it might retrieve the process tree of a malicious process, check the hash on VirusTotal, look up the user’s recent activity, and search for related events in the SIEM. Specialized security LLMs then correlate and reason over all these findings.

This is similar to a human building an incident timeline. The agent reconstructs the attack path, identifies lateral movement (if any), and scopes the blast radius. If an event connects to a known campaign or CVE, that insight is added as enrichment. The result is a rich investigative dossier for each alert – something even a fast analyst would struggle to compile manually.

Threat Intelligence Enrichment:

Throughout investigation, AI agents automatically incorporate external intelligence. They check IP/domain reputations, scan for malicious signatures, and cross-reference indicators of compromise (IOCs) from threat feeds. For example, if the alert involves a suspicious executable, the agent queries malware databases and threat intel platforms. It also applies organizational context: it knows that if the affected asset is a critical database server, the alert’s priority should be higher. By layering in threat intel and business context, the agent ensures that decisions are informed. In short, the agent fills in the blanks before any human sees the case, delivering a fully enriched incident package (logs, user and asset info, reputation scores) on the ticket.
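One way to picture how business context raises an alert's priority is a simple scoring function. The weights, the asset inventory, and the `priority_score` name below are illustrative assumptions only; production agents are unlikely to use a fixed formula like this.

```python
# Hypothetical asset inventory: higher number means more critical.
ASSET_CRITICALITY = {"db-prod-01": 3, "laptop-1234": 1}


def priority_score(base_severity: int, host: str, ioc_matches: int) -> int:
    """Combine detector severity, asset criticality, and threat-intel hits."""
    criticality = ASSET_CRITICALITY.get(host, 1)  # unknown assets get the lowest weight
    return base_severity * criticality + 2 * ioc_matches


# The same alert scores higher on a critical database server than on a laptop.
server_score = priority_score(base_severity=2, host="db-prod-01", ioc_matches=1)
laptop_score = priority_score(base_severity=2, host="laptop-1234", ioc_matches=1)
```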

Decision-Making and Action:

After gathering all evidence, the agent must decide on a verdict. Using its reasoning engine, it classifies the alert as benign, suspicious, or malicious. This decision takes into account both technical signals and business factors. For example, in one illustration the agent notes: “User is marketing staff, but running admin scripts…” and “Hash matches known Emotet variant” – combining these clues to score high confidence of a true threat. If the agent deems the alert malicious, it escalates the case to higher-tier analysts with a recommended action. If benign, it auto-closes it (“Auto-Close”) and documents why, freeing the queue. Critically, the agent explains its reasoning in plain language. Every verdict comes with a natural-language summary and justification (e.g. “False positive – routine task; no malicious behavior found”), providing transparency.

If confidence is very high and pre-approved by policy, the agent can take immediate action (e.g. isolate the host, block the IP, disable the account). Otherwise, it updates the existing ticketing system or SIEM with its findings and recommendation. The end result is that every alert has a clear, documented outcome – whether it’s closed as noise or escalated as a genuine incident – along with all relevant evidence.
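The gating logic described here (act automatically only when confidence is high and policy allows it, otherwise escalate or hand off) might look roughly like the following. The threshold values, action names, and `next_step` function are hypothetical; actual thresholds would be set by each SOC's policy.

```python
from enum import Enum


class Verdict(Enum):
    BENIGN = "benign"
    SUSPICIOUS = "suspicious"
    MALICIOUS = "malicious"


# Hypothetical policy values for illustration only.
AUTO_ACTION_THRESHOLD = 0.95
AUTO_CLOSE_THRESHOLD = 0.90
PRE_APPROVED_ACTIONS = {"isolate_host", "block_ip", "disable_account"}


def next_step(verdict: Verdict, confidence: float, proposed_action: str) -> str:
    """Decide what happens to an alert once the agent has a verdict."""
    if verdict is Verdict.MALICIOUS:
        if confidence >= AUTO_ACTION_THRESHOLD and proposed_action in PRE_APPROVED_ACTIONS:
            return f"execute:{proposed_action}"
        return "escalate"
    if verdict is Verdict.BENIGN and confidence >= AUTO_CLOSE_THRESHOLD:
        return "auto_close"
    # Suspicious or low-confidence cases default to a person.
    return "human_review"
```

Note that a malicious verdict never falls through to auto-close: the worst case for a true threat in this sketch is escalation without automatic containment.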

In summary, AI SOC agents automate the entire alert-handling workflow: ingestion, triage, enrichment, investigation, decision, and reporting. They do this continuously (24/7) and in parallel across thousands of alerts, using specialized reasoning to mirror what an experienced analyst would do, but at machine scale and consistency.

Integration with the Existing Security Stack

A key strength of AI SOC agents is that they build on top of your current tools – they don’t replace them. In practice, deploying an agent involves integrating it with your SIEM, EDR, identity platform, and other sources so it can ingest alerts and query data. As Gartner notes, AI agents “sit on top of the tools and data you already run”. Most solutions come with prebuilt connectors. For example, a typical SOC Analyst Agent integrates with SIEMs like Splunk, Microsoft Sentinel or Sumo Logic, EDRs such as CrowdStrike Falcon, SentinelOne and Microsoft Defender, and ITSM/ticketing systems like ServiceNow, Jira or Zendesk. It may also connect to email gateways, cloud logs, vulnerability scanners, and threat-intel platforms to pull context automatically.

This tight integration means that the AI agent can both read from and write to your existing security tools. It consumes alerts from your SIEM or EDR via webhooks (see Step 1 in Hunto’s workflow) and writes back its findings – for example, updating a ticket in ServiceNow or annotating the alert record. It essentially turns disconnected tools into a unified, AI-driven process.

However, integration is not entirely plug-and-play. It requires sensible planning: you must ensure the agent has timely access to the right data streams and alert feeds. That may mean flattening some silos (e.g., forwarding cloud and network alerts into the SIEM) and verifying APIs or connectors. The good news is that once set up, the agent can leverage the broadest possible intelligence. It will pull in threat intel feeds, check asset inventories, and use identity lookups to inform each investigation. For example, it might query who an alert’s user is, what group memberships they have, or if their account has recently been compromised.

In short, AI SOC agents are designed to augment, not replace, your security stack. They interoperate with SIEMs, EDRs, identity systems, cloud services, threat intel databases, and ticketing channels. Because of this, your existing tools keep delivering value: alerts still come from your SIEM or EDR, but now an AI agent automatically handles them using the data those tools provide.

Business Benefits of AI SOC Agents

Adopting AI SOC agents can transform a security operation in measurable ways:

Faster Response and Detection

AI agents slash mean time to detect (MTTD) and respond (MTTR). For example, where a human might take 30 minutes on average to triage an alert, an agent can do it in seconds. Agents work continuously (24/7) and process multiple alerts in parallel. This means threats are escalated or contained far more quickly. A vendor claims an agent reduced triage time from 30 minutes to 30 seconds per alert, and others report going from weeks of backlog to near-real-time coverage.

Massive False Positive Reduction

By automatically weeding out benign alerts, agents dramatically lower noise. Industry case studies show agents can resolve 50–99% of alerts automatically as false positives. One company achieved 95–99% reduction in false alarms after deploying agentic triage. The result is that analysts spend far less time on junk alerts. Fewer false positives also mean the team can focus on the real threats. As Prophet AI summarizes, agents “filter through massive alert volumes, suppress false positives, and surface the most critical threats”.

Analyst Productivity & Workload Relief:

With mundane tasks offloaded, human analysts work on higher-value activities like threat hunting, incident strategy, and detection tuning. This reduces burnout and turnover. Gartner specifically notes that AI SOC agents enable “security teams to handle more operational work with the same team,” extending human capacity. In one example, organizations using an AI agent saw significant drops in analyst burnout because repetitive investigations were automated. Junior analysts can also contribute sooner: AI agents can handle simple cases, flattening the learning curve.

Consistent, Auditable Investigations:

Unlike manual triage, agents follow standardized, documented procedures every time. This consistency improves auditability and compliance. Each alert investigation yields a written summary and evidence package in the ticket system. Stakeholders (and auditors) can see exactly why the agent made a decision, thanks to transparent reasoning. This uniformity also means process improvements apply across the board – there’s no “analyst A vs. B” variation. In Hunto’s case, every investigation “follows the same rigorous process”.

Scalability and Coverage

An AI agent doesn’t fatigue or ignore alerts. It will systematically review all alerts (especially low-priority ones that humans might skip). This means no alert is overlooked. If an organization suddenly has a flood of alerts (for example, during an attack), the AI can scale up its throughput dynamically. In effect, it turns a fixed analyst team into a much larger virtual team. As one vendor notes, coverage goes from partial to 100% of alerts.

Faster Analyst Training:

New hires ramp up faster because AI agents supply guidance. For example, if a junior analyst reviews an agent’s output, they see a complete analysis rationale and can learn from it. Over time, the agent embodies institutional knowledge that would otherwise exist only in experienced heads. Gartner calls this “built-in capture of institutional knowledge” that doesn’t walk out the door.

Strategic Focus & ROI:

By accelerating routine work, senior talent can spend more time on strategic initiatives like tuning detections, threat hunting, and improving defenses. The strategic benefit of doing more with the same resources often translates to clear ROI. Some vendors cite cost savings in the millions – for instance, one homepage claims ~$5M+ in savings per enterprise (through automation gains). Even without specific numbers, the qualitative return is evident: faster threat response, fewer breaches, and lower headcount needs.

In summary, AI SOC agents bring business-level advantages: speed, scale, and consistency. They improve key SOC KPIs like mean time to detect/respond (MTTD/MTTR) while reducing false-positive rates. They boost team productivity and morale and provide leaders with measurable gains on their security investment.

AI SOC Agents vs. Traditional SOC Tools

It’s helpful to directly compare AI agents with legacy SOC technologies:

AI SOC Agent Vs SIEM:

A SIEM collects logs and generates alerts, but it doesn’t make decisions or take actions. AI agents augment SIEMs by automatically consuming those alerts. Where a SIEM says “here’s an event,” the agent takes the next step: it interprets the alert, enriches it, and decides what to do. In this sense, an AI agent sits on top of your SIEM and multiplies its effectiveness.

AI SOC Agent Vs SOAR:

As noted, traditional SOAR platforms automate based on fixed playbooks. They excel at simple, scripted tasks (like notifying on a known phishing alert), but they don’t reason. AI agents, in contrast, replace these brittle workflows with intelligent reasoning. Prophet Security puts it this way: unlike SOAR, agentic AI “adapts on its own without manual rules or intervention” and delivers efficiency that SOAR never realized.

For example, SOAR might require you to define a playbook for “new user login alert,” whereas an AI agent inherently understands user behavior baselines and can catch anomalies without explicit rules. In essence, most organizations have discovered that SOAR under-delivered on the promise of a lights-out SOC. As one analysis explains, legacy SOAR “struggled with the more complex, judgment-driven ‘thinking’ tasks”. AI SOC agents tackle precisely those thinking tasks that SOAR could not.

AI SOC Agent Vs Manual Tier-1

In a traditional SOC, Tier-1 analysts manually triage alerts with playbooks or checklists. This is slow and error-prone. An AI agent automates that entire Tier-1 role autonomously – essentially acting as a 24/7 Tier-1 analyst. Unlike humans, agents don’t get fatigued or distracted, and they can process tens of alerts at once.

They also work faster; whereas humans might take minutes to piece together context, agents have enriched data in hand in seconds. This empowers human analysts to move from “alert ferrymen” to incident investigators.

AI SOC Agent Vs Threat Hunting:

Traditional threat hunting is periodic and human-driven. AI agents can continuously hunt in the background by applying ML models to find anomalies on the fly. This makes threat detection more proactive.

Overall, AI SOC agents complement and extend the capabilities of existing tools. They are best thought of as an intelligent layer atop your SOC stack. They leverage SIEM, SOAR, EDR, and other tools rather than replacing them outright, but they transform what those tools can achieve by injecting AI-driven cognition into the process.

Limitations & Common Misconceptions

While AI SOC agents are powerful, it’s important to understand their limits and address realistic concerns:

Not a Drop-in Human Replacement:

A common fear is that AI agents will make SOC analysts obsolete. The truth is the opposite: agents are meant to augment analysts, not replace them. They handle repetitive alert triage, but human judgment is still needed for nuanced decisions and containment. Gartner explicitly emphasizes that these agents are an assistant, not a replacement. Leaders must maintain oversight and accountability. In practice, organizations use AI agents to offload low-level work and let analysts focus on strategy, complex incidents, or contexts that require human insight.

Data Quality and Integration Needs:

AI agents rely on the quality and breadth of data available. If your telemetry is sparse or siloed, the agent’s effectiveness suffers. Successful deployments almost always require ensuring that your logs, alerts, and context feeds are comprehensive and well-integrated. In other words, garbage in, garbage out still applies. One guideline from experts: start with clean, high-fidelity alert sources for initial deployment, then expand gradually.

Transparency & Trust:

Another concern is “black box” decision-making. AI agents from reputable vendors are designed to be transparent: they articulate the “why” behind each decision. During evaluation, ensure any agent provides clear explanations (for example, listing the key evidence that led to a malicious verdict). Also, keep humans in the loop at first. Many teams run AI agents in shadow mode initially – the agent makes decisions behind the scenes while humans review – to build trust. A strong practice is to verify and validate the agent’s findings regularly.

False Positives & Hallucinations:

While AI reduces false alarms, it can never be perfect. AI models can hallucinate or make mistakes, especially if confronted with very unusual scenarios. That’s why rigorous testing and continuous feedback are vital. In practice, you should review samples of agent-closed cases to ensure accuracy. If errors are found, the agent can be retrained or tuned. It’s also wise to have a “stop” mechanism: for instance, if an alert is really low confidence, it can default back to human review. Vendors often build in confidence scoring to decide when to auto-respond versus when to simply alert a human.

Complex Licensing and Cost:

Some organizations are concerned about pricing. AI SOC agents are still a new category, and vendors may price per alert or per investigation. It’s important to model the expected alert volume and usage to understand costs. The productivity gains typically far outweigh licensing fees, but budget planning is still necessary.
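A back-of-the-envelope model can help with that budgeting. The function below compares per-alert licensing against the value of analyst time saved. Every number in the example call is a placeholder chosen to make the arithmetic easy to follow, not a benchmark or a vendor price, and `monthly_cost_comparison` is a hypothetical helper.

```python
def monthly_cost_comparison(
    alerts_per_month: int,
    price_per_alert: float,
    minutes_saved_per_alert: float,
    analyst_hourly_cost: float,
) -> dict:
    """Compare per-alert licensing cost with the value of analyst hours saved."""
    licensing = alerts_per_month * price_per_alert
    hours_saved = alerts_per_month * minutes_saved_per_alert / 60
    labor_value = hours_saved * analyst_hourly_cost
    return {
        "licensing": licensing,
        "hours_saved": hours_saved,
        "labor_value": labor_value,
        "net": labor_value - licensing,
    }


# Placeholder inputs: substitute your own alert volume, quote, and labor cost.
estimate = monthly_cost_comparison(
    alerts_per_month=10_000,
    price_per_alert=0.50,
    minutes_saved_per_alert=6,
    analyst_hourly_cost=60.0,
)
```

With these placeholder inputs the model yields 1,000 analyst hours saved against 5,000 in licensing; the point is the shape of the calculation, which is sensitive to how many minutes are really saved per alert.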

Maturity and Market Variation:

This is an emerging space (recently identified by Gartner and others), and product maturity varies. Some solutions are essentially security-directed chatbots, while others, like Hunto or Prophet, provide fully autonomous workflows with verified case outputs. It’s crucial to evaluate any agent on real-world use cases in your environment. Look for case studies or proofs-of-concept that demonstrate actual SOC improvements, not just promise.

In sum, AI SOC agents are not a silver bullet – they come with caveats. However, with proper implementation and oversight, their advantages can vastly outweigh their limitations.

To get there, treat AI agents as governed infrastructure: implement safeguards, monitoring, and human review to manage any new risks they introduce.

Hunto AI and Its SOC Analyst Agent

Hunto AI is pioneering AI SOC agents and various AI Agents for cybersecurity. Hunto’s solutions cover various tasks (risk monitoring, takedown, etc.), but most notably we offer the Hunto SOC Analyst Agent – an autonomous Tier-1 analyst.

The SOC Analyst Agent from Hunto is a “Tier-1 Autonomous SOC Analyst” that automates alert triage and investigation. In practice, this agent integrates with leading SIEMs (Splunk, Sentinel, etc.), EDR platforms (CrowdStrike, SentinelOne, Microsoft Defender), and ticketing systems (ServiceNow, Jira) as required.

When an alert arrives (for example, “Suspicious PowerShell execution on Host X”), the Hunto agent springs into action. It simultaneously queries multiple sources – endpoint data, user identity info, network logs – to gather facts, much like a human would. It checks IP and domain reputation, examines the process tree on the host, and pulls threat intelligence on any matching hashes. It even reviews user history to see if the user often runs such scripts. Using specialized security LLMs, it then thinks through the evidence.

For example, it might reason: “Is this admin user running a routine script on schedule? – likely a false alarm. Is it a new script from a random temp folder? – likely malicious.” Each hypothesis leads to a decision.

The output from Hunto’s agent is highly transparent. It will classify the alert and take action: either auto-close it as a false positive or escalate it as a genuine threat. In the above example, if labeled malicious, the agent escalates the case (#4921) and tags it with MITRE ATT&CK techniques (e.g. “T1059 Command and Scripting Interpreter”). It also generates a human-readable investigation summary for Tier-2 analysts. This summary explains all the key findings and reasoning, so a human reviewer can quickly understand what happened.

Capabilities of Hunto’s SOC Analyst Agent include:

Autonomous Triage:

The agent auto-closes the majority of low-value alerts, reportedly cutting alert volume by 80%+ by filtering out false positives.

Contextual Enrichment:

Before presenting a case to an analyst, it gathers all relevant data – logs, reputation scores, user context – so no one has to pull context manually.

Decision Transparency

Every classification comes with a plain-language explanation. The agent explicitly states why it judged an alert as benign or malicious.

Automated Response Actions

If extremely confident and allowed by policy, the agent can execute containment – for example, isolating an infected host or disabling a compromised account.

Continuous Learning:

The agent learns from feedback. If analysts overrule a decision, the system uses that feedback to refine its models.

In real-world terms, Hunto’s SOC Analyst Agent can reduce triage time from ~30 minutes to ~30 seconds per alert. It also covers 100% of alerts (no low-priority cases get ignored). This means an organization can handle a far higher alert volume without extra headcount. This automation “alleviates alert fatigue” and ensures every alert is investigated.

By integrating with popular SOC tools and providing clear, actionable output, Hunto’s agent shows how an AI agent can be deployed in a live environment to yield the speed and consistency SOC teams need.

Frequently Asked Questions (FAQ)

Will AI SOC Agents replace our human analysts?

No. Think of agents as your smart assistants, not replacements. Experts stress that AI agents are meant to augment the SOC team, not eliminate it. They take over repetitive triage, which allows your analysts to focus on high-value tasks (like hunting, strategy, and complex incidents). In fact, by reducing burnout and freeing up time, agents often make the human analysts’ work more impactful. Remember, “today’s capabilities of AI SOC agents are not a replacement for human operators” – human judgment and oversight remain essential.

How can we trust an AI agent’s decisions?

Trust is built with transparency and validation. Leading solutions provide glass-box explanations of every decision. For each alert, the agent should output not just “yes/no” but a rationale: what signals it found and why it drew its conclusion. This allows analysts to audit the logic.

During deployment, many teams run the agent in parallel with humans (shadow mode) to verify its accuracy. Continuous review and tuning are key. Importantly, human analysts always have final say and the ability to override the agent’s decision. It’s best practice to have robust logging and an audit trail for all agent actions, so you can trace and trust its operations.
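Shadow-mode results are easier to judge when they are summarized as numbers. The sketch below assumes each reviewed alert yields an (agent verdict, analyst verdict) pair; the `shadow_mode_report` name and the verdict strings are hypothetical.

```python
from typing import Dict, List, Tuple


def shadow_mode_report(pairs: List[Tuple[str, str]]) -> Dict[str, float]:
    """Summarize agreement between agent and analyst verdicts."""
    total = len(pairs)
    agree = sum(1 for agent, analyst in pairs if agent == analyst)
    # The costliest disagreement: the agent said benign, the analyst said malicious.
    missed = sum(
        1 for agent, analyst in pairs
        if agent == "benign" and analyst == "malicious"
    )
    return {
        "total": total,
        "agreement_rate": agree / total if total else 0.0,
        "missed_threats": missed,
    }


report = shadow_mode_report([
    ("benign", "benign"),
    ("malicious", "malicious"),
    ("benign", "malicious"),
    ("suspicious", "suspicious"),
])
```

Tracking missed threats separately from the overall agreement rate matters because a high agreement rate can hide a small number of dangerous misses.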

Will the agent make mistakes or “hallucinate”?

AI isn’t perfect. Agents can occasionally misclassify, especially when encountering novel or ambiguous scenarios. However, they typically reduce errors compared to humans because they catch subtle indicators that a person might miss. Moreover, top solutions include confidence thresholds: if the model isn’t highly confident, the case is handed to a human.

To mitigate mistakes, continuously monitor agent performance metrics. If you notice false negatives or false positives slipping through, feed those cases back into the system. Over time, the agent will learn from corrections and improve accuracy.

How do we integrate an AI agent into our SOC?

Integration generally involves connecting the agent to your alert and data sources via APIs or webhooks. Most AI agent platforms offer native connectors to common tools (e.g. Splunk, Sentinel, CrowdStrike, ServiceNow, Jira, etc.). You will need to configure those so alerts flow into the agent and so the agent can query context (like pulling user info from an identity store).

Good integration planning is critical: ensure your SIEM and log systems are capturing the data the agent needs (network logs, process logs, cloud logs, etc.). If your data is distributed, you may need to set up streaming or forwarding so the agent has a unified view. While initial setup takes work, once integrated the agent automatically leverages those connections for every alert.

How much does it cost, and is it worth it?

Pricing models vary by vendor (per alert, per investigation, etc.). What matters is the ROI in analyst time saved and breaches avoided. Consider metrics like analyst hours gained and faster incident containment. Many organizations find the cost quickly offset by the efficiency gains (for example, reducing headcount growth or reassigning expensive SOC engineers to more strategic projects).

It’s wise to pilot a small scope first to measure impact on your own KPIs (alert reduction rate, MTTD, MTTR, etc.) before a full rollout.

Can AI agents handle all types of alerts?

Modern agents cover a broad range: endpoint (EDR) alerts, cloud/Azure/AWS alarms, identity/authentication alerts, email/phishing reports, network IDS events, etc. In the examples above, agents triage everything from suspicious login attempts to malware detections to strange process activity. However, they work best on digital alerts where data is structured.

For highly unstructured cases (like social engineering incidents) human analysts still excel. Over time, vendors are expanding coverage (e.g. adding specialized email or phishing agents), so capabilities are rapidly growing.

What kind of oversight do we need?

Human oversight is essential. Recommended practice is to keep senior analysts in the loop, at least initially. This can mean requiring a human sign-off for any automated actions, or regularly sampling the agent’s closed cases for quality control. Also establish a process for updating the agent’s logic – e.g. whenever a new type of threat emerges or there’s a false positive, feed that back to improve the agent. Good governance (clear policies on what the agent is allowed to do, reporting on its performance, etc.) ensures the system remains accountable. In short, treat the AI agent as critical infrastructure: implement alerts for its failures and logs for audit compliance.

Is this just hype, or are organizations actually using AI SOC Agents?

It’s early, but it’s real. Gartner has recognized AI SOC Agents as an “Innovation Trigger” on its 2025 Hype Cycle. Leading-edge SOCs have begun trials and even production deployments of these agents. For example, several cybersecurity companies (7AI, Prophet Security, Hunto AI, Intezer, Red Canary) now offer commercial AI SOC platforms.

Early case studies report impressive gains (e.g. 95% false positive reduction, triage times cut by 99%). So while caution and due diligence are important, AI SOC agents are moving from concept into practical use in sophisticated environments.

In summary, AI SOC Agents are designed to accelerate and standardize your SOC workflow, not to remove humans from the loop. By understanding their strengths and planning around their limitations, organizations can safely leverage these agents to significantly boost their security operations.